Machine learning needs to account for bias present in the data; use a gradient boosting algorithm for Allstate claim prediction.

Requirement

First Option

Machine learning models are known to absorb bias captured in the data. For example, many natural language processing models have associated doctors with men and nurses with women. Because of the exposure distribution, this bias creates implicit discrimination even when the algorithm excludes the sensitive variable from modeling. In this task, you are asked to:

- A) Build a gradient boosting model for the Allstate claim prediction competition. https://www.kaggle.com/c/ClaimPredictionChallenge/data

- B) Introduce 3 bias assessment measures to rank the bias of each variable. See https://towardsdatascience.com/machine-learning-and-discrimination-2ed1a8b01038 for reference.

- C) Identify the 3 most biased variables and illustrate the measures with supporting visualizations.

- D) Propose 1 method to reduce or remove bias of 2 variables simultaneously. By using the same measures, share the improvement.

- E) Prepare a technical report to illustrate your modeling and findings from A) to D).
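
As a starting point for B), the sketch below shows one possible set of 3 bias measures. The assignment does not prescribe which measures to use, so the choice here (statistical parity difference, disparate impact ratio, equal opportunity difference) is an assumption, as are all function and variable names. It also assumes that each variable has been binarized into two groups (0/1) and that claim predictions have been thresholded into 0/1 flags; the toy data is made up for illustration only.

```python
from typing import Dict, List, Sequence


def positive_rate(flags: Sequence[int]) -> float:
    """Share of 1s in a list of 0/1 flags; 0.0 for an empty list."""
    return sum(flags) / len(flags) if flags else 0.0


def statistical_parity_difference(pred: Sequence[int], group: Sequence[int]) -> float:
    """P(pred=1 | group=1) - P(pred=1 | group=0)."""
    g1 = [p for p, g in zip(pred, group) if g == 1]
    g0 = [p for p, g in zip(pred, group) if g == 0]
    return positive_rate(g1) - positive_rate(g0)


def disparate_impact_ratio(pred: Sequence[int], group: Sequence[int]) -> float:
    """P(pred=1 | group=1) / P(pred=1 | group=0); NaN if the denominator is 0."""
    g1 = [p for p, g in zip(pred, group) if g == 1]
    g0 = [p for p, g in zip(pred, group) if g == 0]
    denom = positive_rate(g0)
    return positive_rate(g1) / denom if denom > 0 else float("nan")


def equal_opportunity_difference(
    pred: Sequence[int], actual: Sequence[int], group: Sequence[int]
) -> float:
    """Difference in true positive rate between group 1 and group 0."""
    tp1 = [p for p, a, g in zip(pred, actual, group) if a == 1 and g == 1]
    tp0 = [p for p, a, g in zip(pred, actual, group) if a == 1 and g == 0]
    return positive_rate(tp1) - positive_rate(tp0)


def rank_by_bias(pred: Sequence[int], variables: Dict[str, Sequence[int]]) -> List[str]:
    """Order variable names from most to least biased by |statistical parity difference|."""
    return sorted(
        variables,
        key=lambda name: abs(statistical_parity_difference(pred, variables[name])),
        reverse=True,
    )


# Toy data (hypothetical): thresholded predictions, observed outcomes, two binarized variables.
pred = [1, 0, 1, 1, 0, 0, 1, 0]
actual = [1, 0, 1, 0, 1, 0, 1, 0]
variables = {
    "var_a": [1, 1, 1, 1, 0, 0, 0, 0],
    "var_b": [1, 0, 0, 1, 1, 0, 0, 1],
}

spd = statistical_parity_difference(pred, variables["var_a"])
dir_ = disparate_impact_ratio(pred, variables["var_a"])
eod = equal_opportunity_difference(pred, actual, variables["var_a"])
ranking = rank_by_bias(pred, variables)
print(spd, dir_, eod, ranking)
```

The same functions can be re-run after the mitigation step in D) so that the before and after values are directly comparable.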

Second Option

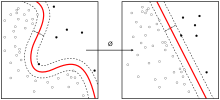

Gradient boosting with both tree and linear base learners: xgboost and lightgbm are the most popular boosting libraries among data scientists. However, most applications presume trees as the base learners. Other base learners, listed below, are rarely used.

xgboost includes a linear predictor as a base learner option (set booster="gblinear" in the parameters). However, the existing library does not allow both tree and linear predictors to estimate parameters in the same model run. In this assignment, you need to modify the source code of the lightgbm package (https://github.com/microsoft/LightGBM):

- A) Include a booster similar to gblinear from xgboost. The module should allow users to train a linear booster and predict from an admissible dataset. You can safely assume the dataset is fully numeric and treat missing values as zeros. (Tip: the new linear module should reside in the LightGBM/src/boosting/ folder.)

- B) Enable the library to call a different booster at each iteration. For example, in a 500-iteration run, the model may select tree (gbdt) in the first iteration and linear in the 2nd and 3rd. In each iteration, one of the 2 base learners is assigned according to a probability parameter provided by the user: the algorithm should first generate a random number so that each base learner is assigned with the appropriate probability. You can safely assume gbdt and linear are the only members.

- C) The resulting booster (called gbdt_and_linear) should have the same class functions and objects as gbdt, i.e., training, prediction, metric calculation, etc.

- D) Write Python/R code to train the algorithm for the Allstate claim prediction competition and make one submission. The data can be found at https://www.kaggle.com/c/ClaimPredictionChallenge/data
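
The per-iteration assignment described in B) can be illustrated with a small pure-Python toy. This is only a sketch of the control flow, not LightGBM code: the actual assignment requires changes to the C++ sources. Every name here (`fit_stump`, `fit_linear`, `boost`, `p_tree`) is hypothetical, the data is one-dimensional, the loss is squared error, and the linear learner is a plain least-squares line rather than the regularized coordinate updates used by gblinear.

```python
import random
from typing import Callable, List, Tuple

Learner = Callable[[float], float]


def fit_stump(x: List[float], r: List[float]) -> Learner:
    """Depth-1 regression tree on residuals r, chosen by lowest squared error."""
    best = None
    for t in sorted(set(x))[:-1]:  # thresholds that leave both sides non-empty
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        sse = sum((ri - ml) ** 2 for ri in left) + sum((ri - mr) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, t, ml, mr)
    if best is None:  # all x values identical: fall back to the mean residual
        m = sum(r) / len(r)
        return lambda v: m
    _, t, ml, mr = best
    return lambda v: ml if v <= t else mr


def fit_linear(x: List[float], r: List[float]) -> Learner:
    """Least-squares line a + b * x fitted to residuals r."""
    n = len(x)
    mx, mr = sum(x) / n, sum(r) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (ri - mr) for xi, ri in zip(x, r)) / sxx if sxx > 0 else 0.0
    a = mr - b * mx
    return lambda v: a + b * v


def boost(
    x: List[float], y: List[float], n_iter: int = 50,
    p_tree: float = 0.5, lr: float = 0.3, seed: int = 0,
) -> Tuple[Learner, List[str]]:
    """Boosting loop that draws the base learner type at every iteration."""
    rng = random.Random(seed)
    base = sum(y) / len(y)
    pred = [base] * len(y)
    learners: List[Learner] = []
    kinds: List[str] = []
    for _ in range(n_iter):
        resid = [yi - pi for yi, pi in zip(y, pred)]
        # Random draw first, then assign: tree with probability p_tree, else linear.
        kind = "gbdt" if rng.random() < p_tree else "linear"
        f = fit_stump(x, resid) if kind == "gbdt" else fit_linear(x, resid)
        pred = [pi + lr * f(xi) for pi, xi in zip(pred, x)]
        learners.append(f)
        kinds.append(kind)

    def predict(v: float) -> float:
        return base + lr * sum(f(v) for f in learners)

    return predict, kinds


def mse(y: List[float], yhat: List[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(y, yhat)) / len(y)


# Toy target (hypothetical): a linear trend plus a step, so both learner types are useful.
x = [i / 10 for i in range(40)]
y = [2 * xi + (3.0 if xi > 2 else 0.0) for xi in x]

predict, kinds = boost(x, y, n_iter=50, p_tree=0.5)
mse_base = mse(y, [sum(y) / len(y)] * len(y))
mse_model = mse(y, [predict(xi) for xi in x])
print(kinds[:5], mse_base, mse_model)
```

Setting `p_tree` to 1.0 or 0.0 in this sketch reduces it to a pure tree or pure linear run, which is a convenient sanity check when comparing against the single-learner boosters.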