Use the SHAP library to explain a classifier and to perform feature selection.

Objectives

This assignment consists of written problems and programming exercises on transparency and explanations.

The programming part of this assignment focuses on explaining classifiers, and on doing feature selection based on a set of explanations. You will use the open-source SHAP library to complete the programming portion of this assignment. We encourage you to carefully read the paper describing SHAP, which we discussed in class.

After completing this assignment, you will:

- Understand how to use SHAP to generate locally interpretable explanations of classification decisions on a text corpus.

- Learn how to use local explanations for feature selection, ultimately improving classification accuracy on a text corpus.

You must work on this assignment individually. If you have questions about this assignment, please send a message to “All Instructors in this site” through NYU Classes.

Grading

The homework is worth 60 points, or 10% of the course grade. You are allotted 2 (two) late days over the term, which you may use on a single homework, on two homeworks, or not at all. If an assignment is submitted up to 24 hours late, one late day is used in full; if it is submitted between 24 and 48 hours late, two late days are used in full.

Your grade for the programming portion will be significantly impacted by the quality of your written report. Your report should be checked for spelling and grammar, and it should include all information on which you want to be graded. You will receive no credit for plots that do not appear in your report. All plots should be readable: with appropriate axis labels and readable font size. You should discuss the plots in your report, carefully explaining your observations.

Submission instructions

Provide written answers to Problems 1 and 2 in a single PDF file created using LaTeX. (If you are new to LaTeX, Overleaf is an easy way to get started.) Provide code in answer to Problem 2 in a Google Colaboratory notebook. Both the PDF and the notebook should be turned in as Homework 3 on BrightSpace. Please clearly label each part of each question.

Problem 1: AI Ethics: Global Perspectives

In this part of the assignment, you will watch a lecture from the AI Ethics: Global Perspectives course and write a memo (500 words maximum) reflecting on issues raised in the lecture. You may watch any one of the following lectures:

- “Content Moderation in Social Media and AI”

- “Indigenous Data Sovereignty”

- “Do Carebots Care? The Ethics of Social Robots in Care Settings”

- “The Intersection of AI and Consumer Protection”

- “Data Protection & AI”

If you have not already registered, please register for the course at https://aiethicscourse.org/contact.html, specify “student” as your position/title, “New York University” as your organization, and enter the course number, DS-GA 1017, in the message box.

In your memo, you should discuss the following:

- Identify the stakeholders. In particular, which organizations, populations, or groups could be impacted by the data science issues discussed in the lecture? How could the data science application benefit the population(s) or group(s)? How could the population(s) or group(s) be adversely affected?

- Identify and describe an issue relating to data protection or data sharing raised in the lecture.

- Which vendor(s) owns the data and/or determines how the data is shared or used?

- To what extent is the privacy of users or persons represented in the data being protected? Is the data protection adequate?

- How do transparency and interpretability, or a lack thereof, affect users or other stakeholders? Are there black boxes?

- What incentives does the vendor (e.g., the data owner, company, or platform) have to ensure data protection, transparency, and fairness? How do these incentives shape the vendor’s behavior?

Problem 2: Generating Explanations with SHAP

For the programming portion of this assignment, we will use a subset of the text corpus from the 20 newsgroups dataset. This is the dataset used in the LIME paper to generate the Christianity/Atheism classifier, and to illustrate the concepts. However, rather than explaining predictions of a classifier with LIME, we will use this dataset to explain predictions with SHAP.

- (a) Use the provided Colab template notebook to import the 20 newsgroups dataset from sklearn.datasets, importing the same two-class subset as was used in the LIME paper: Atheism and Christianity. Use the provided code to fetch the data, split it into training and test sets, fit a TF-IDF vectorizer to the data, and train an SGDClassifier.

- (b) Generate a confusion matrix (hint: use sklearn.metrics.confusion_matrix) to evaluate the accuracy of the classifier. The confusion matrix should contain a count of correct Christian, correct Atheist, incorrect Christian, and incorrect Atheist predictions. Use SHAP’s explainer to generate visual explanations for any 5 documents in the test set. The documents you select should include some correctly classified and some misclassified documents.

- (c) Use SHAP’s explainer to study misclassified documents, and the features (words) that contributed to their misclassification, by taking the following steps:

- Report the accuracy of the classifier, as well as the number of misclassified documents.

- For a document doc_i, let conf_i denote the difference between the classifier’s predicted probabilities for the two classes for that document. Generate a chart that shows conf_i for all misclassified documents (for a misclassified document, conf_i represents the magnitude of the error). Use any chart type you find appropriate to give a good sense of the distribution of errors.

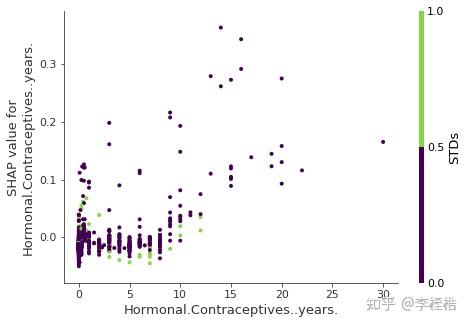

- Identify all words that contributed to the misclassification of documents. (Naturally, some words will be implicated for multiple documents.) For each word (call it word_j), compute (a) the number of documents it helped misclassify (call it count_j) and (b) the total weight of that word in all documents it helped misclassify (call it weight_j), computed as the sum of the absolute values of the SHAP weights of word_j over those misclassified documents. The reason to use absolute values is that SHAP assigns a positive or a negative sign to a word’s weight depending on the class to which word_j is contributing. Plot the distributions of count_j and weight_j, and discuss your observations in the report.
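
The quantities defined in the steps above can be illustrated on toy data. The following is a minimal sketch, not a solution: the `misclassified` records, their probabilities, and the per-word SHAP values are made up for illustration, conf_i is taken here as the absolute gap between the two probabilities, and the rule used to decide that a word "helped" a misclassification (its SHAP value points toward the wrongly predicted class) is an assumption. In the notebook, these values would come from the classifier and from SHAP's explainer.

```python
from collections import defaultdict

# Toy stand-ins (assumptions, not real model output).
# Each record describes one misclassified document: the (wrong) predicted
# class, the probabilities of class 0 and class 1, and hypothetical
# per-word SHAP values measured toward class 1.
misclassified = [
    {"pred": 0, "proba": (0.75, 0.25),
     "shap": {"god": -0.5, "post": 0.25, "bible": -0.25}},
    {"pred": 1, "proba": (0.375, 0.625),
     "shap": {"god": 0.5, "post": -0.25, "edu": 0.25}},
    {"pred": 1, "proba": (0.125, 0.875),
     "shap": {"post": 0.5, "edu": 0.25, "bible": -0.5}},
]

# conf_i: gap between the two class probabilities of document i.
conf = [abs(doc["proba"][0] - doc["proba"][1]) for doc in misclassified]

count = defaultdict(int)     # count_j: documents that word_j helped misclassify
weight = defaultdict(float)  # weight_j: summed |SHAP value| over those documents

for doc in misclassified:
    # Assumed rule: a word helped the misclassification if its SHAP value
    # points toward the wrongly predicted class.
    sign = 1.0 if doc["pred"] == 1 else -1.0
    for word, value in doc["shap"].items():
        if sign * value > 0:
            count[word] += 1
            weight[word] += abs(value)

# List words from the largest to the smallest total weight.
for word in sorted(weight, key=weight.get, reverse=True):
    print(word, count[word], weight[word])
```

The lists and dictionaries produced this way (`conf`, `count`, `weight`) are the inputs one would pass to a plotting library to draw the requested distributions.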

- (d) In this problem, you will propose a feature selection method that uses SHAP explanations. The aim of feature selection is to improve the accuracy of the classifier.

- Implement a strategy for feature selection, based on what you observed in Problem 2(c). Report the accuracy of the classifier after feature selection. Describe your feature selection strategy and the results in your report.

- Show at least one example of a document that was misclassified before feature selection and that is correctly classified after feature selection. In your report, discuss how the explanation for this example has changed.

Hint: As you are designing your feature selection strategy, consider removing some features (words) from the training set.
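
One way to act on this hint is sketched below, purely as an illustration. The `weight` dictionary, the helper names `select_words_to_remove` and `remove_words`, the `top_k` cutoff, and the toy training document are all hypothetical; the sketch simply assumes that the words with the largest total misclassification weight are the ones to drop, which is only one of many possible strategies and is not guaranteed to improve accuracy.

```python
import re

# Hypothetical weight_j totals (assumption; in practice these would be
# aggregated from SHAP explanations of misclassified documents).
weight = {"edu": 1.0, "host": 0.5, "post": 0.5, "bible": 0.25}


def select_words_to_remove(weight, top_k):
    """Return the top_k words with the largest total weight.

    Ties are broken alphabetically so the result is deterministic.
    """
    ranked = sorted(weight.items(), key=lambda item: (-item[1], item[0]))
    return [word for word, _ in ranked[:top_k]]


def remove_words(text, words):
    """Drop every token of `text` that appears in `words` (case-insensitive)."""
    blocked = {w.lower() for w in words}
    tokens = re.findall(r"\w+", text)
    return " ".join(t for t in tokens if t.lower() not in blocked)


to_remove = select_words_to_remove(weight, top_k=2)

# Toy training document (assumption) with the selected words filtered out.
train_docs = ["Post from host.edu about God and the Bible"]
cleaned = [remove_words(doc, to_remove) for doc in train_docs]
print(to_remove, cleaned)
```

After cleaning the training text, the vectorizer and classifier would be refit and the test accuracy compared with the original. An alternative with the same effect is to pass the selected list as the `stop_words` argument of scikit-learn's `TfidfVectorizer`, which accepts a list of words to ignore.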